Multiverse Computing launches CompactifAI API for AWS model compression

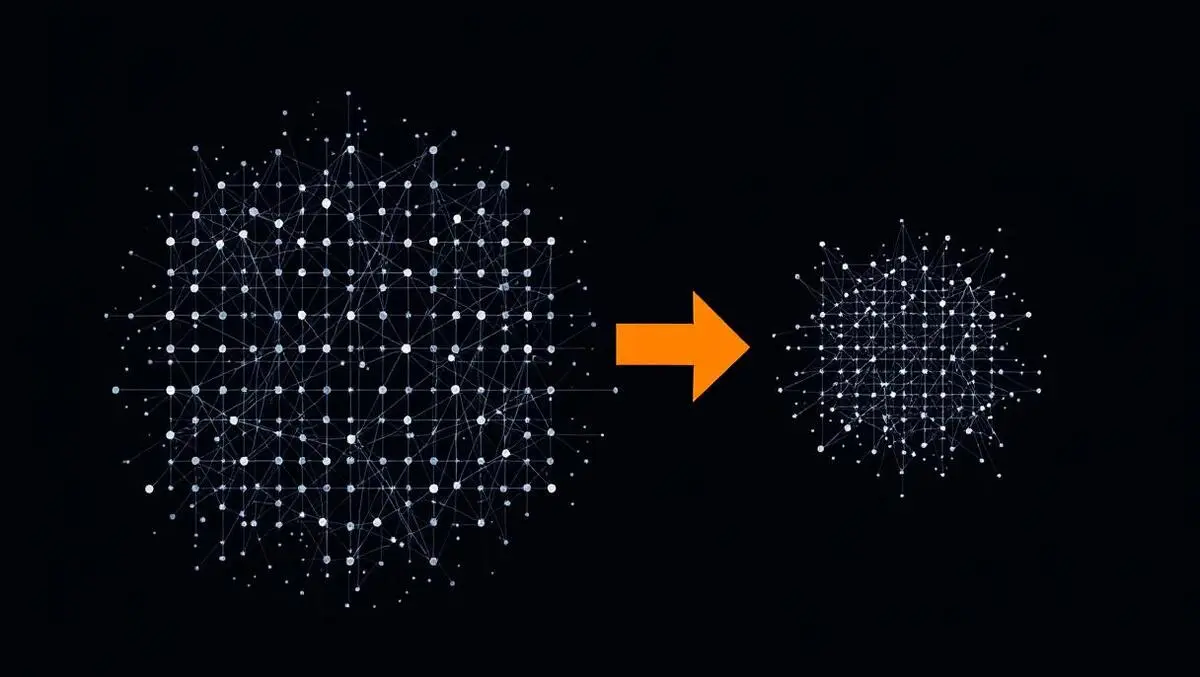

Multiverse Computing has launched its CompactifAI Application Programming Interface (API) for pre-compressing and optimising large AI models on AWS.

The CompactifAI API follows more than a year of development, testing and planning by the company, including participation in the 2024 AWS Generative AI Accelerator program. It is the company's first solution designed to provide scalable, cost-effective and user-friendly large language model (LLM) deployment on AWS infrastructure.

Now available in AWS Marketplace, the CompactifAI API offers a serverless LLM access layer built on Amazon SageMaker HyperPod. This approach enables efficient deployment of compressed models and supports inference across hundreds of GPUs at scale. Through its work with AWS, Multiverse Computing focused on improving the performance and cost-efficiency of leading AI models, creating a straightforward onboarding process, and aligning its distribution strategy for broad market access.

The API currently supports compressed versions of models including Meta Llama, DeepSeek and Mistral, which are now optimised for performance and resource efficiency. These compressed models have been developed to help clients deploy LLM applications more effectively and at reduced operational costs, addressing ongoing industry concerns about compute demands and environmental impacts as AI becomes more widely used.

Ruben Espinosa, Chief Technology Officer of the AI assistant Luzia, commented on the role of CompactifAI in their system:

"Integrating CompactifAI's compressed models into our customer support chatbot has been a game changer. We have reduced our model footprint by over 50% while maintaining high response quality with lower latency and cost. The performance efficiency of the compressed model lets us deliver faster, more reliable interactions to our users across multiple regions, without compromising on natural language understanding."

Clients using the API can choose from a portfolio of models tailored to different performance and budget requirements, helping to meet the operational needs of organisations in banking, automotive, chemicals and other sectors. Multiverse Computing's customer base includes companies such as Rolls-Royce, Santander, BASF, Mercedes and Allianz.

Chief Executive Officer and Co-Founder Enrique Lizaso provided further perspective on the significance of this release:

"This isn't just a product launch - it's the culmination of a strategic journey. We've built CompactifAI API from the ground up to revolutionise AI deployment with top performance and cost-effective solutions, and we're thrilled to bring it to market with AWS."

Jon Jones, Vice President and Global Head of Startups and Venture Capital at AWS, also noted the potential impact of the solution for other businesses:

"Multiverse Computing's CompactifAI API will help more businesses to make use of advanced AI capabilities with accessible options for optimising models to their requirements. Innovations like these can play a valuable role in democratising access to AI and helping a wide range of businesses to apply the technology."

Multiverse Computing uses cloud technologies to deliver a scalable and secure API infrastructure for compressed AI model deployment. The company aims to offer models in a user-oriented format, supported by comprehensive documentation, clear licensing information and an onboarding process integrated directly with AWS Marketplace.

The CompactifAI API landing page provides a range of resources, including detailed model cards, pricing and performance comparisons, documentation, licensing terms, and side-by-side comparison tools to help customers select the compressed model best suited to their needs.

By offering LLM compression of up to 95% with a typical accuracy loss of only 2-3%, Multiverse Computing aims to address concerns about infrastructure cost and sustainability while widening access to advanced AI across sectors. The company serves clients in the UK and worldwide, and reports more than 100 customers in industries such as energy, banking and manufacturing.